Character.AI

Founded Year

2021

Stage

Series A | Alive

Total Raised

$190M

Valuation

$0000

Last Raised

$190M | 2 yrs ago

Mosaic Score

The Mosaic Score is an algorithm that measures the overall financial health and market potential of private companies.

-7 points in the past 30 days

About Character.AI

Character.AI creates artificial intelligence for conversational purposes within the technology sector. The company offers chatbots that can engage in conversations, understand user inputs, and remember past interactions, providing a tailored experience for each user. These chatbots are intended for users seeking virtual companions. It was founded in 2021 and is based in Menlo Park, California.

ESPs containing Character.AI

The ESP matrix leverages data and analyst insight to identify and rank leading companies in a given technology landscape.

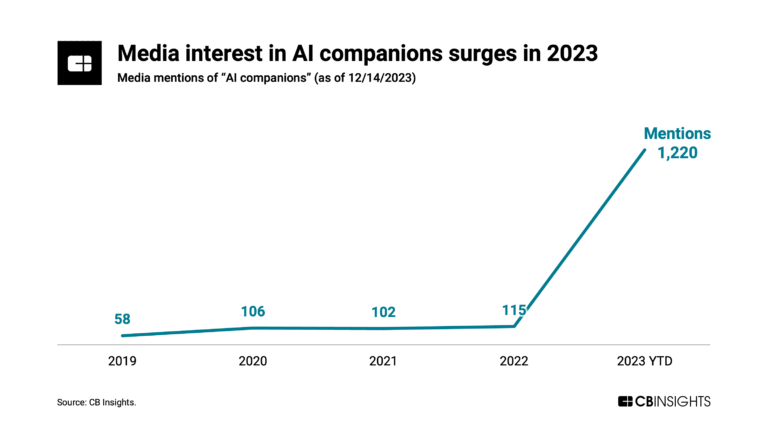

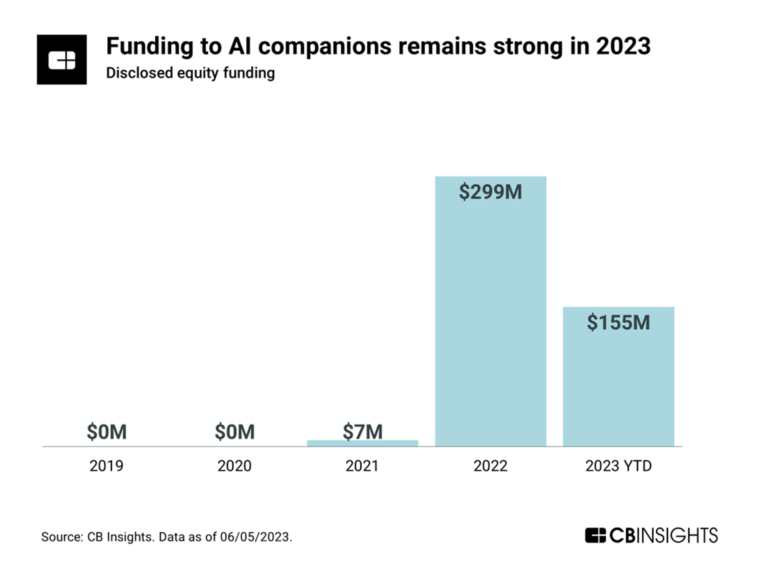

The AI companions market utilizes artificial intelligence to create conversational agents capable of engaging in natural language conversations with humans. These agents are designed to understand and respond to user queries, providing personalized solutions and recommendations. The technology behind these agents is constantly evolving, with new advancements in natural language processing and mach…

Character.AI is named a Leader among 5 other companies, including Inflection AI, Soul Machines, and Chai.

Character.AI's Products & Differentiators

Character.AI

Character.AI is one of the world’s leading personal AI platforms. Founded in 2021 by AI pioneers Noam Shazeer and Daniel De Freitas, Character.AI is a full-stack AI company with a globally scaled direct-to-consumer platform. Character.AI is uniquely centered around people, letting users personalize their experience by interacting with AI “Characters.”

Research containing Character.AI

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned Character.AI in 11 CB Insights research briefs, most recently on Jan 8, 2025.

Expert Collections containing Character.AI

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

Character.AI is included in 6 Expert Collections, including Unicorns- Billion Dollar Startups.

Unicorns- Billion Dollar Startups

1,270 items

Digital Content & Synthetic Media

516 items

The Synthetic Media collection includes companies that use artificial intelligence to generate, edit, or enable digital content under all forms, including images, videos, audio, and text, among others.

AI 100

100 items

Generative AI 50

50 items

CB Insights' list of the 50 most promising private generative AI companies across the globe.

Generative AI

1,299 items

Companies working on generative AI applications and infrastructure.

Artificial Intelligence

7,221 items

Latest Character.AI News

Apr 1, 2025

The digital world often feels like an extension of one’s own. People share their thoughts, their lives, and sometimes even their deepest secrets online. But with every step into the virtual space, there’s an unseen shadow lurking just behind the glow of our screens. Cyberstalking is one such shadow, a creeping form of harassment that hides in the anonymity of the internet, silently making its way into people’s lives.

Over the years, this form of abuse has taken various shapes, from repeated unwanted messages and threats to the use of personal information for manipulation or intimidation. As technology continues to evolve, cyberstalking has become an increasingly complex issue, requiring a combination of legal, social, and technological responses to protect victims and hold perpetrators accountable.

In the U.S., several high-profile cases have highlighted the severity of the issue. For example, the case involving eBay employees charged with conspiracy to commit cyberstalking and witness tampering shocked the public. These employees, tasked with providing customer service, instead turned their attention to a couple who had criticized the company in an online newsletter. Over time, their harassment escalated to sending the couple disturbing packages and threats. This case highlighted not only the danger of cyberstalking but also the potential for it to be carried out by individuals in positions of power. There are also heartbreaking stories of sexual exploitation and psychological manipulation, such as instances where victims were coerced into compromising situations through persistent online abuse. And as technology advances, so does the sophistication of the tactics employed by cyberstalkers, making it even harder for authorities to track down perpetrators and for victims to escape the harassment.

AI, typically seen as a tool for efficiency, convenience, and innovation, has unfortunately found itself on the wrong side of this equation. While AI’s benefits are undeniable, its potential for misuse has rapidly expanded, making it a double-edged sword. Platforms like CrushOn.AI and JanitorAI allow users to create customizable chatbots that can generate sexually explicit or suggestive content. Initially marketed for companionship or entertainment, these platforms are now being hijacked by cyberstalkers. The ease with which AI tools can be used to craft harmful content speaks volumes about the dark undercurrents running through some corners of the internet. And the consequences are devastating.

Take the case of James Florence from Massachusetts, who used AI platforms to create targeted chatbots aimed at harassing a specific victim. Florence generated sexually suggestive and threatening content, using the bot to manipulate his victim psychologically. This story is a grim illustration of how AI, when misused, becomes a tool for emotional and psychological abuse. The danger lies not just in the harmful content but in the ease with which it can be created and tailored to exploit individual vulnerabilities.

What’s even more troubling is how these platforms, often marketed to young users, lack sufficient safety measures. By 2025, there will be over 100 AI companions available, many free and unregulated, creating a fertile breeding ground for abuse. The fact that users, especially minors, can access and use these platforms without sufficient safeguards is a recipe for disaster. Without proper monitoring, the ability to create emotionally resonant, highly personalized AI companions can be weaponized to manipulate and harass. The FBI has already issued a warning about the rise of AI-enabled cybercrimes, including phishing, social engineering attacks, and voice and video cloning scams. As AI continues to evolve, so too will the threats posed by its malicious use.

The real danger isn’t just the technology itself; it’s the human element. Cyberstalkers exploit our behaviors, vulnerabilities, and interactions to cause harm, making it all the more crucial for us to consider both the technological and ethical dimensions of AI’s power.

Many AI startups have emerged to develop chatbots that help businesses improve their customer engagement. Platforms like BotPenguin and Botsify provide businesses with the tools to create intuitive, automated chatbots without requiring any coding expertise. These AI-powered chatbots are a great example of how technology can drive efficiency and enhance user experience in professional settings. But there’s a darker side to this tech. Platforms like CrushOn.AI and Character.AI are designed to provide emotionally personalized experiences, from casual companionship to intimate conversations. While some users might seek harmless fun or entertainment, the personalized nature of these platforms makes them ripe for exploitation.

AI’s Emotional Grip

AI chatbots are creating deeply personal and sometimes dangerous emotional attachments. A poignant example comes from the tragic case of Sewell Setzer III, a 14-year-old who reportedly sent his last message to a chatbot before taking his own life. The young boy engaged in highly sexualized conversations with the AI, and his death prompted a wrongful death lawsuit. This story speaks to the potential harm of AI chatbots, especially when they’re used inappropriately or without adequate safeguards.

Despite all the controversies surrounding these platforms, the reality is that some of these NSFW AI chatbot companies continue to attract significant funding. Character.AI, which raised $150 million in Series A funding in 2022, is just one example of a company gaining recognition and financial backing despite its controversial content. Similarly, AI Dungeon, a text-based AI game powered by Latitude, has raised millions in funding, including an $8.5 million Series A round in 2021. Both platforms cater to users who are drawn to the personalized and immersive experiences these bots offer, but without sufficient regulation, they also provide a breeding ground for abuse.

In response to the growing concern over AI’s role in cyberstalking, experts are raising alarms. James Steyer, founder and CEO of Common Sense Media, emphasized the need for increased awareness and regulations to ensure that vulnerable individuals, especially children, are protected from the harms of generative AI. His organization’s report, “The Dawn of the AI Era,” reinforces the idea that while generative AI is powerful, we must tread carefully, ensuring that its deployment doesn’t expose users to exploitation.

As one regular user of NSFW AI chatbots commented on Reddit, “Janitor LLM is awful, the replies feel robotic, are way too long and ‘Shakespearian’ and it constantly tries to end the scene.”

Upasana Banerjee is a Content Strategist with AIM Research. Prior to her role at AIM, she worked as a journalist and social media editor, and holds a strong interest in global politics and international relations. Reach out to her at: upasana.banerjee@analyticsmag.com

Character.AI Frequently Asked Questions (FAQ)

When was Character.AI founded?

Character.AI was founded in 2021.

Where is Character.AI's headquarters?

Character.AI's headquarters is located at 700 El Camino Real, Menlo Park.

What is Character.AI's latest funding round?

Character.AI's latest funding round is Series A.

How much did Character.AI raise?

Character.AI raised a total of $190M.

Who are the investors of Character.AI?

Investors of Character.AI include A.Capital, Nat Friedman, Greycroft, SV Angel, Andreessen Horowitz and 3 more.

Who are Character.AI's competitors?

Competitors of Character.AI include Personal AI, MindOS, Rasa, Digi, Inworld AI and 7 more.

What products does Character.AI offer?

Character.AI's products include Character.AI and 2 more.

Compare Character.AI to Competitors

Replika is an AI service that operates in the artificial intelligence and mental health support sectors. The company offers a chatbot that engages users in conversation, assisting with mental well-being. Replika's primary customer segments include individuals seeking companionship and those looking for mental health support. It was founded in 2015 and is based in San Francisco, California.

Pypestream specializes in AI-powered customer experience automation within various business sectors, including insurance, telecommunications, retail, and more. The company offers an omnichannel platform that leverages conversational AI to provide personalized, efficient customer service and support through a library of microapps. Pypestream's solutions are primarily utilized by industries such as insurance, telecommunications, retail, quick service restaurants, travel, hospitality, and real estate. It was founded in 2015 and is based in New York, New York.

Aigo.Ai specializes in cognitive AI technology for the conversational AI and customer support automation sectors. The company offers an advanced AI chatbot that understands context, remembers past interactions, learns incrementally, and provides hyper-personalized conversations. Aigo.Ai's technology is designed to enhance customer engagement and streamline business processes across various industries, including telecommunications, healthcare, e-commerce, banking, insurance, real estate, professional services, automotive, and enterprise software. It was founded in 2013 and is based in Torrance, California.

Logicdialog is a company specializing in intelligent automation and conversational AI within the technology sector. They offer AI-powered software solutions that enable automated service channels such as voice and chatbots, and transform manual communication channels into autonomous ones. The company primarily serves sectors such as local government, housing, utilities, and transport. Logicdialog was formerly known as We Build Bots. It was founded in 2017 and is based in London, England.

Cognigy specializes in conversational artificial intelligence platforms and customer service automation within the technology sector. It offers virtual agents that facilitate customer service interactions through voice and digital chat and support contact center operations. Its solutions are utilized across various industries, including airlines, automotive, financial services, healthcare, insurance, retail, telecommunications, and utilities. The company was founded in 2016 and is based in Dusseldorf, Germany.

Kasisto focuses on conversational artificial intelligence solutions, primarily in the banking and finance sector. The company offers a platform that provides intelligent digital assistants, designed to facilitate accurate, human-like conversations and empower teams with financial knowledge. Kasisto primarily serves global financial institutions, regional banks, community banks, and credit unions. It was founded in 2013 and is based in New York, New York.
