Groq

Founded Year

2016

Stage

Unattributed | Alive

Total Raised

$2.503B

Last Raised

$1.5B | 2 mos ago

Mosaic Score

The Mosaic Score is an algorithm that measures the overall financial health and market potential of private companies.

+97 points in the past 30 days

About Groq

Groq operates as an AI inference technology company within the semiconductor and cloud computing sectors. The company provides computation services for AI models, ensuring compatibility and efficiency for various applications. Groq's products are designed for both cloud and on-premises AI solutions. It was founded in 2016 and is based in Mountain View, California.

ESPs containing Groq

The ESP matrix leverages data and analyst insight to identify and rank leading companies in a given technology landscape.

The AI inference processors market develops specialized chips for efficiently executing pre-trained AI models in real-time applications. These processors prioritize low latency and energy efficiency, making them essential for tasks such as image recognition, natural language processing, and recommendation systems in devices such as smartphones, robotics, and autonomous vehicles. The market is expa…

Groq is named as a Leader among 15 other companies, including Advanced Micro Devices, Samsung, and IBM.

Groq's Products & Differentiators

GroqRack™

For data center deployments, GroqRack provides an extensible accelerator network. Combining the power of an eight GroqNode™ set, GroqRack features up to 64 interconnected chips. The result is a deterministic network with an end-to-end latency of only 1.6µs for a single rack, ideal for massive workloads and designed to scale out to an entire data center.

Research containing Groq

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned Groq in 5 CB Insights research briefs, most recently on Jan 28, 2025.

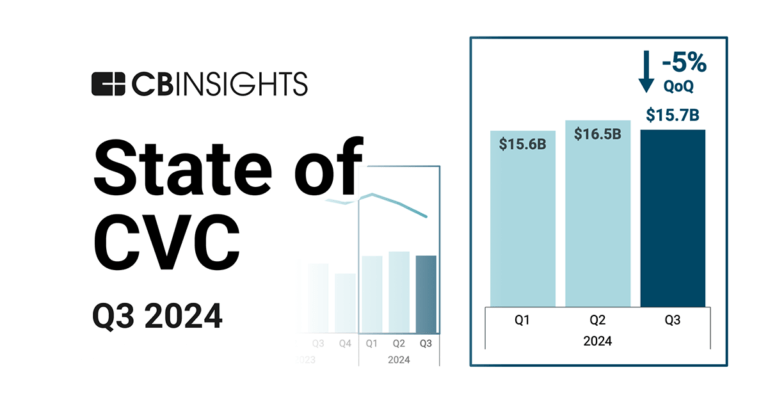

Oct 31, 2024 report

State of CVC Q3’24 Report

Sep 13, 2024

The AI computing hardware market map

Expert Collections containing Groq

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

Groq is included in 4 Expert Collections, including Unicorns- Billion Dollar Startups.

Unicorns- Billion Dollar Startups

1,270 items

Semiconductors, Chips, and Advanced Electronics

7,329 items

Companies in the semiconductors & HPC space, including integrated device manufacturers (IDMs), fabless firms, semiconductor production equipment manufacturers, electronic design automation (EDA), advanced semiconductor material companies, and more

AI 100 (2024)

100 items

Artificial Intelligence

7,221 items

Groq Patents

Groq has filed 85 patents.

The 3 most popular patent topics include:

- parallel computing

- computer memory

- instruction set architectures

| Application Date | Grant Date | Title | Related Topics | Status |
|---|---|---|---|---|
| 12/12/2022 | 3/25/2025 | | Computer memory, Parallel computing, Video cards, Natural language processing, Memory processes | Grant |

Latest Groq News

Mar 28, 2025

Too Long; Didn't Read

The latest empathy benchmarks reveal Groq's DeepSeek model (deepseek-r1-distill-llama-70b-specdec) offers the best balance of empathy, speed, and cost. While Claude Sonnet 3.5 and ChatGPT 4o score slightly higher on empathy (0.98), their 7+ second response times are problematic for real-time interactions. DeepSeek delivers 0.90 empathy with blazing 1.6s responses at less than half the cost. User testing confirms DeepSeek and Claude responses are nearly indistinguishable, with ChatGPT feeling somewhat colder. Simply instructing LLMs to be empathetic proves ineffective, and commercial guardrails may actually hinder empathetic responses.

This is my third set of benchmarks on empathetic AI. Since the last round of benchmarks, DeepSeek, Gemini Flash 2.0, Claude Sonnet 3.7, and OpenAI ChatGPT o3-mini have arrived on the scene. The new value leader for empathy is a DeepSeek derivative, Groq deepseek-r1-distill-llama-70b-specdec. DeepSeek itself was not included in the benchmarks because it had erratic response times that frequently exceeded 10s and sometimes simply erred.

In this round of benchmarks, I have included response time and costs. An academic study I have been doing, plus common sense, seem to indicate that slow responses will have a negative impact on perceived empathy. In fact, anything over 3 or 4 seconds is probably bad from a chat perspective. Furthermore, LLM costs are now all over the map and are certainly relevant to making product management decisions. As the table below shows, if anything, more expensive models are less empathetic!

For those unfamiliar with my previous benchmarks, they are driven by well-established cognitive assessments coupled with the use of an AI, Emy, specifically designed to be empathetic without being trained against, prompted, or RAG-assisted with questions from the assessments.

As I have mentioned in previous articles, empathy scores are not the only success measure. The actual quality of user interactions needs to be taken into account. This being said, Claude Sonnet 3.5 and ChatGPT 4o, with 0.98 applied empathy scores, appear to present the most potential for generating empathetic content; however, their speeds at 7s+ are marginal, while Groq deepseek-r1-distill-llama-70b-specdec, with an empathy score of 0.90, responds in a blazing 1.6s and is less than 50% of the cost! Even if you use Claude with boosted speeds from an alternate provider other than Anthropic, e.g., Amazon, it won’t come close to a 2s response time.

My review of actual chat dialogues, coupled with testing by independent users, has shown Claude Sonnet and Groq distilled DeepSeek responses are almost indistinguishable, with Claude feeling just a little warmer and softer. ChatGPT 4o responses consistently read as a little cold or artificial and are rated lower by users.

Gemini Pro 1.5 may also be a reasonable choice with a score of 0.85 and a very low cost. Gemini 2.0 Pro (experimental) has gone down in empathy. However, I have found the chat responses from all Gemini models a bit mechanical. I have not tested Gemini with an end-user population.

I continue to find that simply telling an LLM to be empathetic has little or no positive impact on its empathy scores. My research shows that aggressive prompting will work in some cases, but for many models, it is strictly the nature of the end user engagement through the current chat that seems to tip the scales to empathy. In these cases, the need for empathy must be quite clear and not “aged out” in the conversation, or the LLMs fall into the systematic “fix the problem/find a solution” mode.

Through work with several open-source models, it has also become evident that the guardrails required of commercial models may get in the way of empathy. Working with less constrained open-source models, there seems to be some correlation between an LLM’s “belief” that it exists as some kind of distinct “real” entity and its ability to align its outputs to those perceived as empathetic by users. The guardrails of commercial models discourage the LLMs from considering themselves distinct “real” entities.

Response Time is the average response time for any single test when the Emy AI is used. The Token In and Token Out are the total tokens for all tests when the Emy AI is used. Pricing for Groq deepseek-r1-distill-llama-70b-specdec was not yet available when this article was published; the pricing for the versatile model was used. Pricing for Gemini Flash 1.5 is for small queries; larger ones cost double. Pricing for Gemini Pro 2.5 (experimental) was not yet published when this article was written.

Major thinking models missing from the analysis, e.g., Gemini 2.5 Pro, are too slow for any kind of real-time empathetic interaction, and some basic testing shows they are no better and often worse from a formal testing perspective. This is not to say they couldn’t be used for generating empathetic content for other purposes … perhaps Dear John letters ;-).

I’ll be back with more benchmarks in Q3. Thanks for reading!
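
The trade-off the article describes (highest empathy among models fast enough for chat) can be illustrated with a minimal Python sketch. This is not the author's benchmark code. The names `ModelResult` and `pick_realtime_model` are hypothetical, the 3-second cutoff is taken from the article's "anything over 3 or 4 seconds is probably bad" remark, and 7.0 s is an assumed stand-in for the reported "7s+" response times. Cost is left out because the excerpt quotes no prices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelResult:
    name: str
    empathy: float          # applied empathy score quoted in the article
    response_time_s: float  # average response time in seconds


# Figures quoted in the article; 7.0 is an assumed lower bound for "7s+".
RESULTS = [
    ModelResult("Claude Sonnet 3.5", 0.98, 7.0),
    ModelResult("ChatGPT 4o", 0.98, 7.0),
    ModelResult("Groq deepseek-r1-distill-llama-70b-specdec", 0.90, 1.6),
]


def pick_realtime_model(results, max_response_time_s=3.0):
    """Return the most empathetic model within the latency cutoff, or None."""
    fast_enough = [r for r in results if r.response_time_s <= max_response_time_s]
    return max(fast_enough, key=lambda r: r.empathy, default=None)


best = pick_realtime_model(RESULTS)
print(best.name if best else "no model meets the cutoff")
```

Raising the cutoff above 7 seconds would let the 0.98-scoring models back in, which is the sense in which the article calls their speeds "marginal" rather than disqualifying in every setting.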

Groq Frequently Asked Questions (FAQ)

When was Groq founded?

Groq was founded in 2016.

Where is Groq's headquarters?

Groq's headquarters is located at 301 Castro Street, Mountain View.

What is Groq's latest funding round?

Groq's latest funding round is Unattributed.

How much did Groq raise?

Groq raised a total of $2.503B.

Who are the investors of Groq?

Investors of Groq include Kingdom of Saudi Arabia, KDDI, BlackRock, Type One Ventures, Cisco and 19 more.

Who are Groq's competitors?

Competitors of Groq include Ampere, Blaize, Furiosa AI, Tenstorrent, NeuReality and 7 more.

What products does Groq offer?

Groq's products include GroqRack™ and 4 more.

Compare Groq to Competitors

Cerebras focuses on artificial intelligence (AI) acceleration through its development of wafer-scale processors and supercomputers for different sectors. The company provides computing solutions that support deep learning, natural language processing, and other AI workloads. Cerebras serves industries including healthcare, scientific computing, and financial services with its AI supercomputers and model training services. It was founded in 2016 and is based in Sunnyvale, California.

Tenstorrent is a computing company specializing in hardware focused on artificial intelligence (AI) within the technology sector. The company offers computing systems for the development and testing of AI models, including desktop workstations and rack-mounted servers powered by its Wormhole processors. Tenstorrent also provides an open-source software platform, TT-Metalium, for customers to customize and run AI models. It was founded in 2016 and is based in Toronto, Canada.

Wave Computing focuses on AI-native dataflow technology and processor architecture in the technology sector. The company provides products and solutions for deep learning and artificial intelligence applications, applicable to devices from edge to datacenter environments. Wave Computing's MIPS division offers licensing for processor architecture and core intellectual property. It is based in Campbell, California.

Mythic is an analog computing company that specializes in AI acceleration technology. Its products include the M1076 Analog Matrix Processor and M.2 key cards, which provide power-efficient AI inference for edge devices and servers. Mythic primarily serves sectors that require real-time analytics and data throughput, such as smarter cities and spaces, drones and aerospace, and AR/VR applications. Mythic was formerly known as Isocline Engineering. It was founded in 2012 and is based in Austin, Texas.

Westwell Lab specializes in autonomous driving solutions and operates within the bulk logistics industry. The company integrates artificial intelligence with new energy sources to improve operations in global logistics. Westwell Lab serves sectors such as seaports, railway hubs, dry ports, airports, and manufacturing facilities. It was founded in 2015 and is based in Shanghai, China.

ChipIntelli operates in the intelligent voice chip industry and focuses on providing solutions for more natural, simple, and smart human-machine interactions. The company offers a range of intelligent voice chips and solutions that cater to various applications, including offline voice recognition and voice-enabled smart devices. ChipIntelli's products are primarily used in the smart home appliances, smart lighting, smart automotive, and smart education/entertainment sectors. It was founded in 2015 and is based in Chengdu, China.
