With industry leaders divided in their approaches, we look at the winners and losers in the open-source vs. closed-source landscape and what enterprises need to think about next for adoption.

This is part 1 of 2 in our series on the generative AI divide. In part 2, we will cover considerations for enterprise adoption of open and closed models.

The divide between open-source and closed-source AI models is reshaping tech industry dynamics.

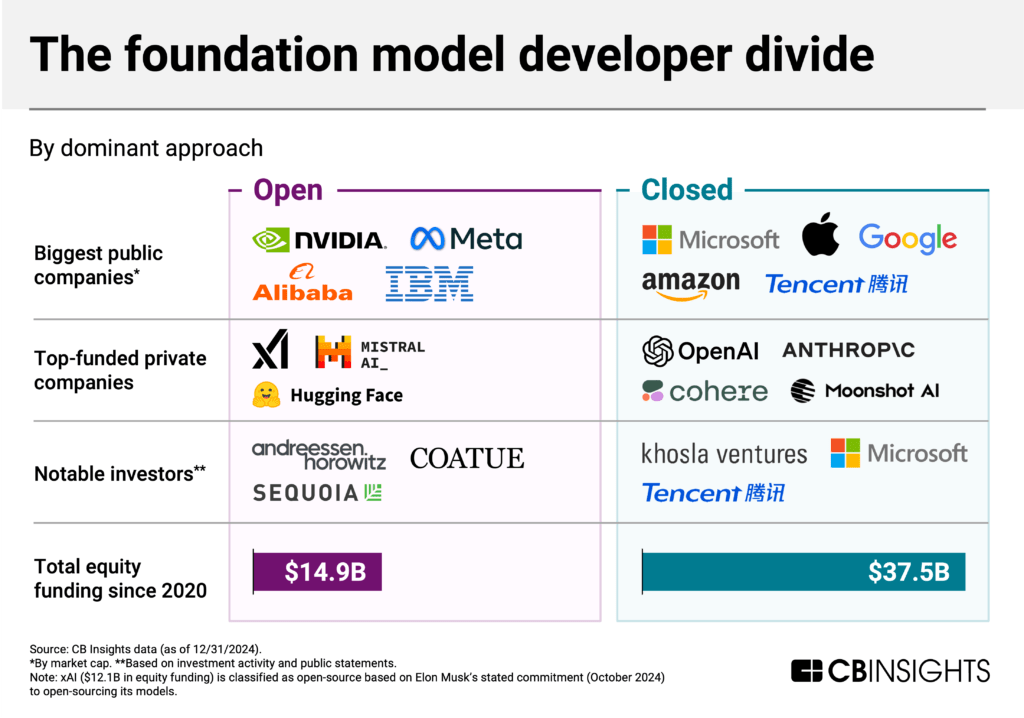

Tech leaders have staked out clear positions: Meta and xAI are open-sourcing models like Llama 3.1 and Grok-1, while Google and OpenAI have largely walled off their systems. Investment flows are also split between both approaches. Since 2020, private open-source AI model developers have attracted $14.9B in venture funding, while closed-source developers have secured $37.5B — reflecting different bets on how AI innovation will unfold.

The core difference lies in access: closed-source approaches keep model details and weights proprietary, while open-source development makes these elements available so models can be more freely studied, run, and adapted.

Companies building generative AI applications must understand this evolving landscape, as it has crucial implications for the infrastructure they adopt. Based on current trends, we expect:

- Consolidation around frontier models: Closed-source models from players like OpenAI, Anthropic, and Google will dominate the market. Only tech giants like Meta, Nvidia, and Alibaba are likely to sustain the costs of developing open-source models that can compete on performance with proprietary ones. Frontier model training costs are growing 2.4x annually, driven by hardware, staffing, and energy needs, according to Epoch AI.

- Revenue and investment gaps threaten open-source model developers’ viability: While burning cash, closed-source leaders like Anthropic and OpenAI lead the private market in funding, revenue, and commercial traction. Open-source developers face similar costs but struggle to generate revenue or attract capital investment ($14.9B vs. closed-source’s $37.5B since 2020). This suggests they will turn to commercializing closed models (e.g., Mistral AI), pivot to smaller, specialized offerings (e.g., Aleph Alpha), or both.

- Smaller models drive open-source adoption: Industry leaders, alongside a range of smaller players, are releasing smaller, specialized open-source models, as evidenced by Microsoft’s Phi, Google’s Gemma, and Apple’s OpenELM. This suggests a two-tier market for enterprises evaluating the landscape: closed-source frontier models for the most sophisticated applications and open-source smaller models for edge and specialized use cases.

Below, we use CB Insights data to map out the open-source and closed-source AI landscape. Our analysis focuses on foundation models — the powerful, general-purpose AI systems that form a critical infrastructure layer.

CB Insights customers can track every company mentioned in this analysis using this search. We used the Generative AI — large language model (LLM) developers and Generative AI — image generation market profiles to establish the private market landscape, focusing on companies that have received funding and are developing foundation models.

Table of contents

Consolidation around frontier models

- Industry leaders are divided in their approaches

- Closed-source developers lead the private market in equity funding

- Performance gaps converge, with largest companies’ models topping leaderboard

Revenue and investment gaps threaten open-source model developers’ viability

- OpenAI dominates LLM adoption and revenue, followed by Anthropic

- Open-source’s path to revenue remains unclear

- Investors hedge their bets

Smaller models drive open-source adoption

- A wave of smaller foundation model players will move away from frontier model development

- Market bifurcation accelerates

Consolidation around frontier models

Industry leaders are divided in their approaches

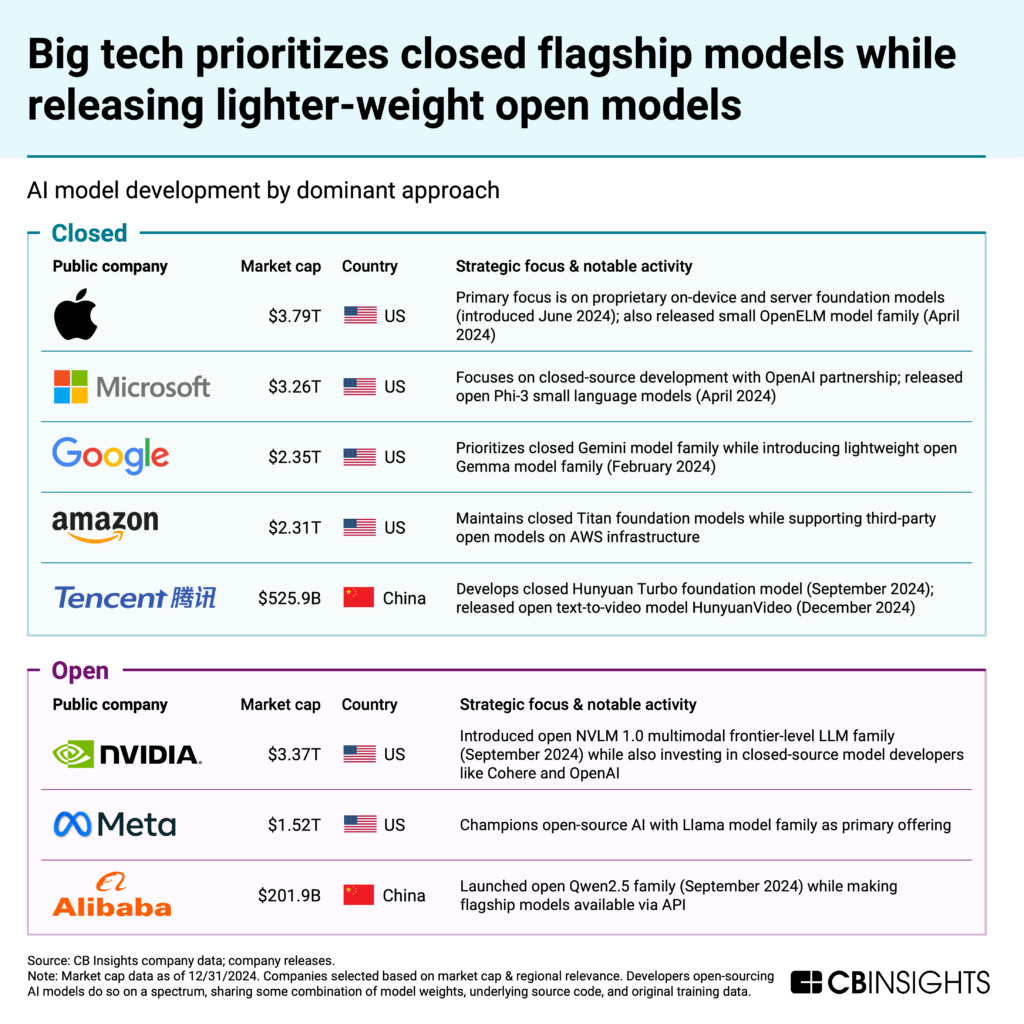

Many big tech companies — like Google and Apple — are releasing a combination of open and closed models, typically keeping their flagship models proprietary while releasing lighter-weight open models as an extension of their research efforts.

Meta and Nvidia, meanwhile, go a step further by open-sourcing their flagship models as well.

Note: When developers “open-source” AI models, they do so on a spectrum, publicly disclosing some combination of the model weights (the learned parameters of a neural network, which encapsulate the knowledge acquired during training and are therefore crucial to the model’s performance and capabilities), the underlying source code, and the original training data. Open-sourcing may also involve licensing the model for free commercial use.

Open-source proponents are preparing for an open-source future

Meta CEO Mark Zuckerberg wrote in July that “Meta is committed to open source AI,” expressing the belief that an open ecosystem will eventually become the standard. On earnings calls, Meta mentions open source more often than any other big tech company.

At the same time, Zuckerberg acknowledged in April on Dwarkesh Patel’s podcast that the company will only continue open-sourcing “as long as it’s helping us.”

In July 2024, Meta released the model weights for its latest Llama model family so developers can fine-tune the models (further train them on custom data). However, the training data and training code remain unavailable, limiting full modification or analysis. Meanwhile, Nvidia released both the model weights and training code for its NVLM 1.0 family of large multimodal language models in September 2024.

Closed-source proponents view revenue as crucial for top resources and talent

For example, Baidu CEO Robin Li said in an internal memo that open-source models “make little sense.” From a business perspective, he noted, “Being closed source allows us to make money, and only by making money can we attract computational resources and talent.”

Safety remains central to the debate

Critics of open-source AI models fear they will be misused by malicious actors to access harmful information (like how to build a bomb or write code for a cyberattack). They also raise national security concerns, arguing that foreign actors could use open-source models to advance military applications (like weapons systems and intelligence tech), undermining the strategic advantages held by countries that currently lead in AI development.

Closed models use techniques like Reinforcement Learning from Human Feedback (RLHF) during fine-tuning to limit the harmful content the model can produce. Open models, meanwhile, are more likely to be deployed without these safeguards.

On the other hand, open-source AI proponents argue, as highlighted in Mozilla’s Joint Statement on AI Safety and Openness with 1,800+ signatories, that increasing access to foundation models will ultimately make them safer, thanks to increased transparency, scrutiny, and knowledge sharing.

Closed-source developers lead the private market in equity funding

The private market is also split, with closed developers leading in equity funding.

While Mistral AI and xAI are both proponents of open source, their flagship models are currently closed.

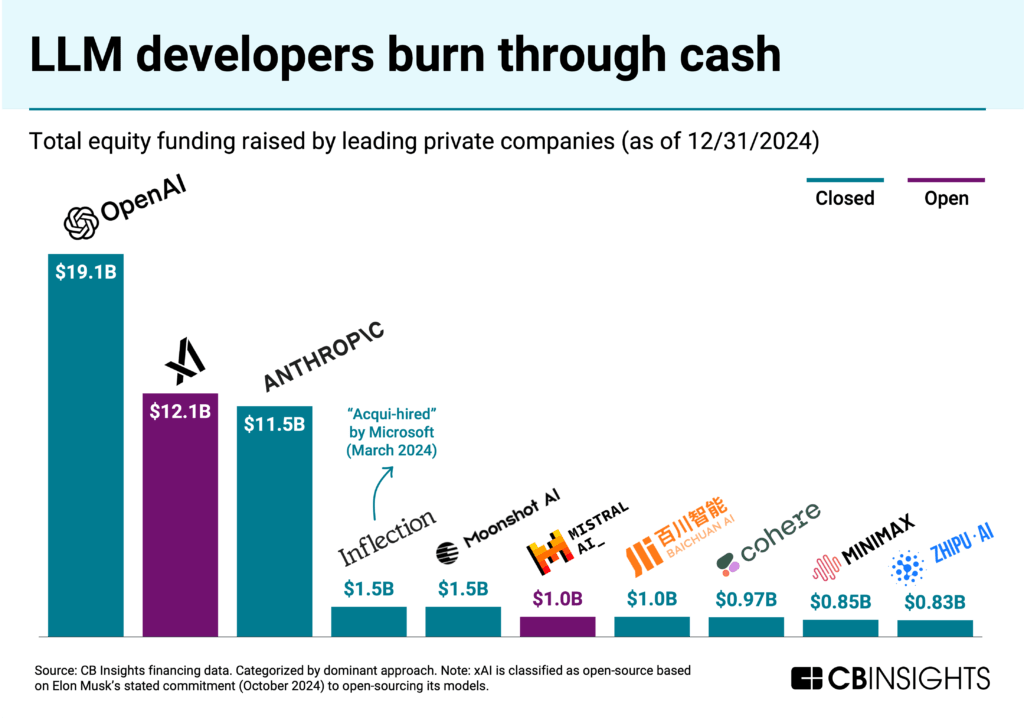

The cost to develop frontier models — taking into account hardware, staffing, and energy consumption costs — is growing 2.4x per year. This is driving the fundraising race.
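To make the compounding concrete, here is a short worked calculation. It assumes, purely for illustration, that the 2.4x annual growth rate holds constant and starts from a hypothetical baseline training cost $C_0$; actual costs will vary by model and year.

$$
C(n) = C_0 \cdot 2.4^{n}, \qquad C(1) = 2.4\,C_0, \quad C(2) = 5.76\,C_0, \quad C(3) \approx 13.8\,C_0
$$

Under this assumption, training a frontier model three years from now would cost roughly 14 times today's budget, which helps explain why capital access has become decisive.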

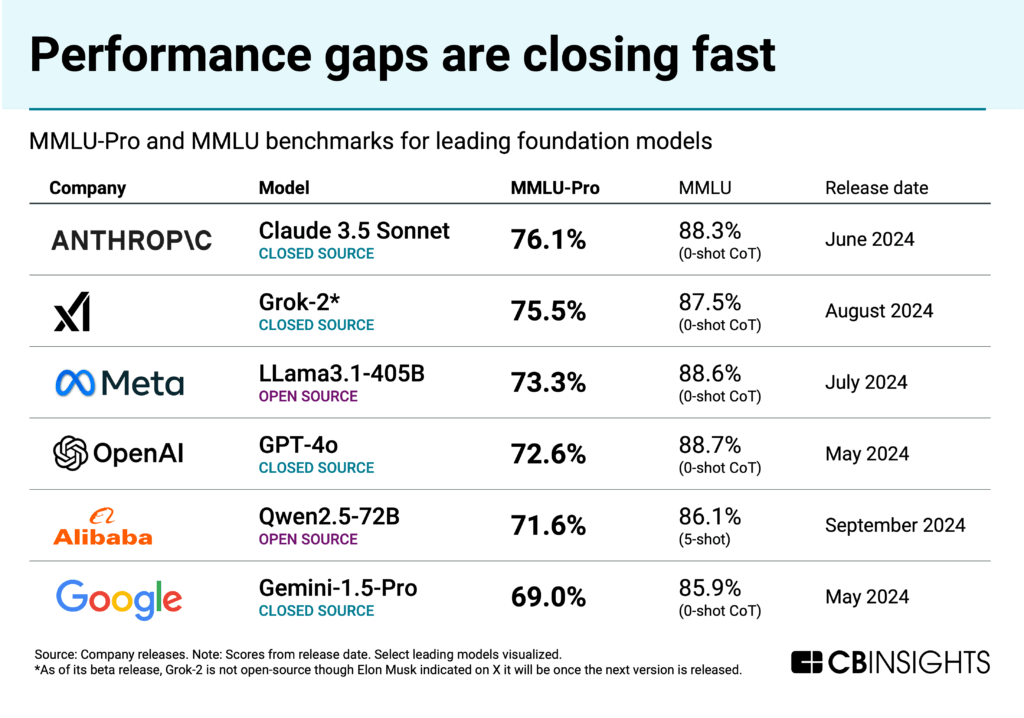

Performance gaps converge, with largest companies’ models topping leaderboard

Leading open-source models, like Meta’s largest Llama model, are making their way onto the MMLU leaderboard. MMLU is a benchmark that evaluates a language model’s knowledge and reasoning skills; its expanded version, MMLU-Pro, includes more challenging questions to assess advanced reasoning capabilities.

At the same time, proprietary models still lead open-source ones by several months: comparable open-source models tend to be released months after their proprietary counterparts.

The leaderboard itself is dominated by the largest companies in both big tech and the private market, indicating market consolidation at the frontier level.

At this stage, a16z co-founder Marc Andreessen has posited that we could be approaching a “race to the bottom”: a future in which foundation models have no moats and open-source performance is on par with closed-source. This possibility has drawn more attention in recent months as frontier labs like OpenAI and Google have turned to smaller models and other products (like agents), with performance gains slowing and release dates for the largest models (such as a potential GPT-5) being pushed back.

Below, we look at how revenue and adoption gaps in the private market also point to increasing consolidation.

Revenue and investment gaps threaten open-source model developers’ viability in the private market

OpenAI dominates LLM adoption and revenue, followed by Anthropic

As LLM developers burn through cash, the focus has shifted to customer adoption — and revenue.

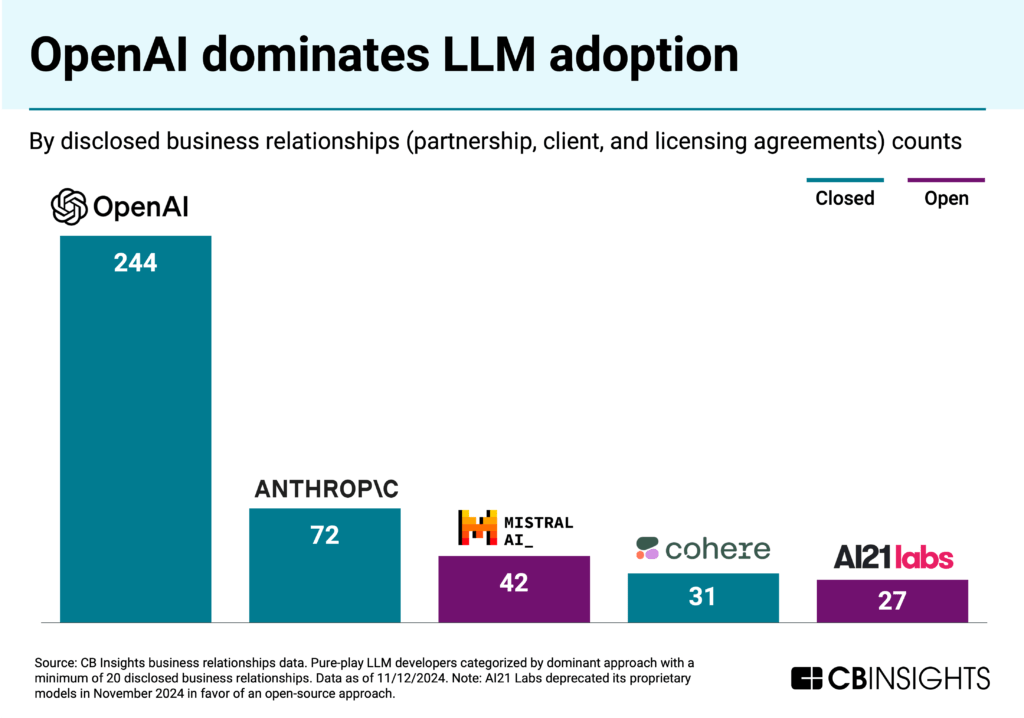

Based on CB Insights business relationship data, OpenAI is far ahead of its peers in terms of its disclosed partnerships and client relationships.

This business relationship analysis is limited to publicly disclosed partnership, client, and licensing agreements for pure-play model developers to highlight adoption trends. Relationships are not exhaustive and are directionally representative of trends across model developers’ partner and client relationships.

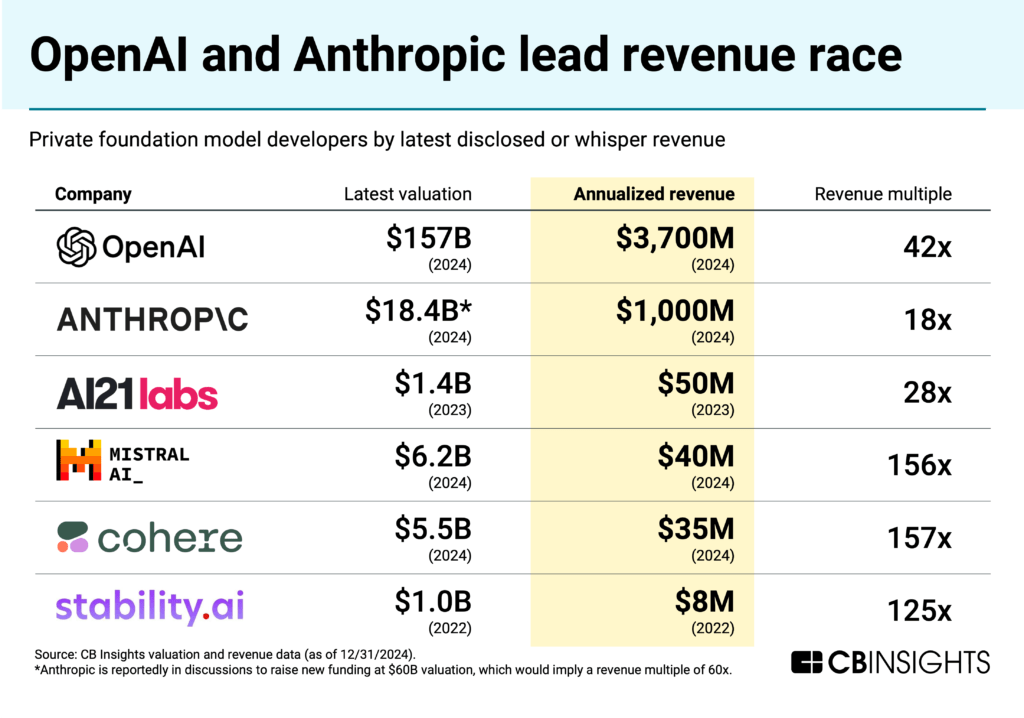

In terms of revenue, OpenAI leads, with projections of $3.7B in annual revenues for 2024 and $11.6B for 2025. However, it’s also been burning cash: the company projected midway through the year that it would lose $5B in 2024.

Open-source’s path to revenue remains unclear

While revenues for open-source model developers are not publicly available in most cases, reports suggest revenue generation is more limited — especially given the competition from Meta’s Llama.

The embattled Stability AI reportedly generated $8M in 2022 and less than $5M in the first quarter of 2024 (while losing over $30M). In June 2024, it secured an $80M funding deal that included the forgiveness of $100M in debts owed to cloud providers and other suppliers.

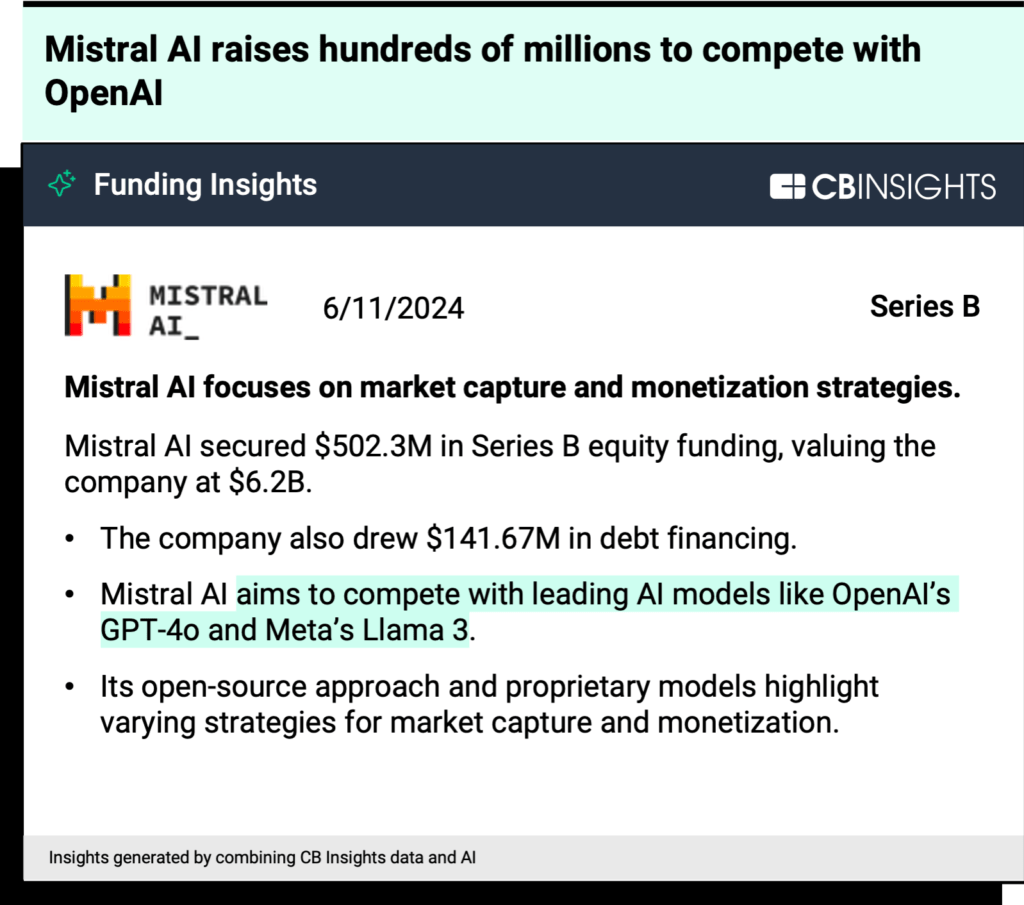

Meanwhile, according to reporting by The Information, Mistral AI’s path to revenue is unclear: it sells access to its API, and fewer than 10% of its users pay for Mistral’s larger commercial models through partners. Most of its smaller, open-source models are free.

Source: CB Insights — Mistral funding insight

Following the traditional approach to monetizing open-source businesses — building paid support offerings or tools (plugins, security, migration, apps on top) around the open-source core — some model developers are now building more enterprise capabilities into their platforms.

For example, Databricks offers security and other paid support services around its open-source LLM, DBRX. Similarly, in August 2024, Aleph Alpha launched a “sovereign AI” platform designed to help corporations and governments in the European market deploy LLMs (not necessarily its own) with added control and transparency features.

Investors hedge their bets

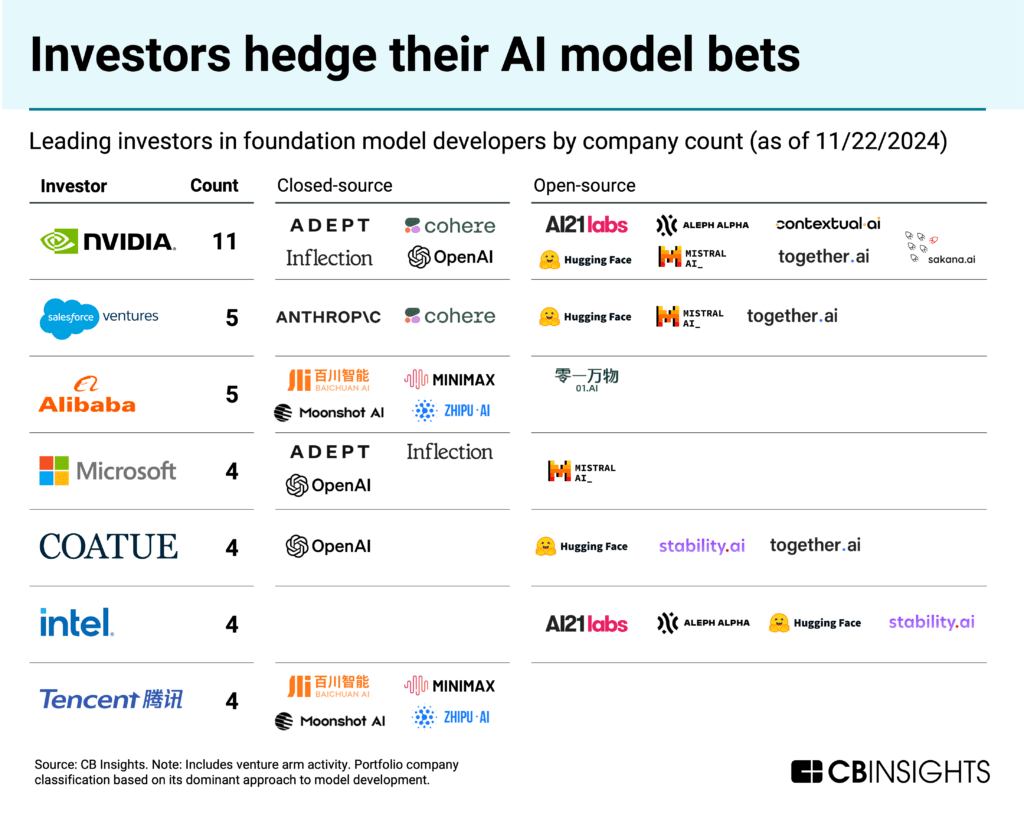

Most leading investors in private foundation model developers have backed companies developing both closed and open models.

Corporate investors feature heavily: Nvidia, Alibaba, and Microsoft, for example, have provided computing power and funds for development. These investments support their core businesses, such as AI chips and cloud computing. AWS, Azure, and Google Cloud all host both open and closed models.

Venture investors are taking sides:

- Coatue, the leading VC by unique companies backed, has called open source “the heartbeat of AI.” It’s taking a complementary approach: “We see open-source models as firmly having a place alongside proprietary ones.”

- a16z’s founders are proponents of open-source models, arguing that their transparency and accessibility will help ensure that AI is developed securely and ethically. In 2024, the two largest a16z-backed AI deals went to open-source LLM developers xAI and Mistral AI.

- Meanwhile, Founders Fund partner John Luttig has argued that the future of foundation models is closed-source. Khosla Ventures’ Vinod Khosla (a backer of OpenAI) also argues in favor of closed-source AI for safety reasons.

The investor split reflects uncertainty over which ecosystem will dominate and where the greatest value creation will occur. Still, the gap in funding totals ($14.9B in equity funding to open-source model developers vs. $37.5B to closed-source), along with the available revenue data, suggests that a closed approach is poised to win out for private developers, especially given that the most performant open models currently come from big tech leaders.

Smaller models drive open-source adoption

A wave of smaller foundation model players will move away from frontier model development

The conditions of a) high compute costs, b) limited moats, and c) competition from big tech have created a market ripe for a shake-up.

We’re seeing a wave of smaller foundation model players respond in one of three ways:

- Collapse into big tech: Adept, Inflection, and Character.AI have all essentially been “acqui-hired” by big tech companies, with founders and large portions of teams joining the acquirers. These deals reflect the high costs of model development, with licensing payments often directed to investors.

- Paywall frontier models: Some open-source AI developers now sell access to premium models while keeping basic versions free — similar to strategies used by big tech. For example, Mistral AI’s flagship model Mistral Large is built for commercial use (not open-source) and is available on Azure in partnership with Microsoft.

- Focus on smaller, open-source models: Developers like Germany-based Aleph Alpha and Israel-based AI21 Labs have shifted in 2024 from competing on general-purpose LLMs to building lighter-weight, optimized models and related AI tools. These models are open-source, with paid services layered on top.

Market bifurcation accelerates

Based on these trends, the AI model market is splitting into two tiers:

- Frontier models are largely dominated by closed-source offerings from well-funded players (OpenAI, Anthropic, Google), which can sustain growing compute costs. Meta’s Llama remains the most notable open-source alternative.

- Smaller models, optimized for specific use cases or edge deployment, are supported by a growing open-source ecosystem. These small language models (SLMs) have fewer parameters than LLMs, making them cheaper to train and easier to run.

Industry leaders are releasing smaller, open-source models to advance research efforts and to promote edge applications: Google with Gemma, Microsoft with Phi, and Apple with OpenELM.

For example, Microsoft highlighted in a recent earnings call:

“We have also built the world’s most popular SLMs, which offer performance comparable to larger models but are small enough to run on a laptop or mobile device. Anker, Ashley, AT&T, EY, and Thomson Reuters, for example, are all already exploring how to use our SLM Phi for their applications.” — Satya Nadella, CEO of Microsoft, Q2’24 Earnings Call

Meanwhile, of the 11 private SLM development platforms we identified, roughly half are already in the process of deploying their products.

Smaller, open models are also gaining traction in sectors like financial services and healthcare, where keeping sensitive data on-premises can be a requirement.

For example, a VP of machine learning at a health insurance company needed a solution for training healthcare models and looked to Hugging Face’s open-source library. In our May 2024 conversation, the buyer highlighted the opportunity of SLMs for their use case:

“I really think small language models are the future. You don’t need these huge proprietary LLMs for the vast, vast majority of use cases that you’re dealing with, especially some of the administrative burden in healthcare that we deal with.”

VP of Machine Learning, publicly traded multinational health insurance company

For now, it’s clear a hybrid approach is winning with enterprises: they will look to closed-source frontier models for the most sophisticated applications and open-source smaller models for edge and specialized use cases.
